FATA #2 / Big Data - NoSQL

[FATA] — From test automation to architecture article series

NoSQL describes non-relational databases.

Differences Between SQL and NoSQL Databases

SQL

Relational databases are structured and have predefined schemas, like phone books that store phone numbers and addresses.

SQL is used for relational databases, which store data in tables with fixed columns and rows.

NoSQL

Non-relational databases are unstructured, distributed, and have a dynamic schema, like file folders that hold everything from a person’s address and phone number to their Facebook ‘likes’ and online shopping preferences.
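To make the contrast concrete, here is a minimal sketch that uses Python's built-in sqlite3 module for the relational side and plain dictionaries as a stand-in for documents. The table name, field names, and sample values are made up for illustration.

```python
import sqlite3

# Relational side: the table fixes its columns up front.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE contacts (name TEXT, phone TEXT, address TEXT)")
conn.execute("INSERT INTO contacts VALUES (?, ?, ?)", ("Ann", "555-0100", "1 Main St"))


def insert_with_unknown_column():
    """Try to write a column that the schema does not define."""
    try:
        conn.execute("INSERT INTO contacts (name, likes) VALUES (?, ?)", ("Bob", "hiking"))
        return True
    except sqlite3.OperationalError:
        return False  # the table has no column named "likes"


# Non-relational side: each document carries its own set of fields.
documents = [
    {"name": "Ann", "phone": "555-0100", "address": "1 Main St"},
    {"name": "Bob", "likes": ["hiking"], "shopping": {"preferred_store": "books"}},
]
fields_per_document = [sorted(doc) for doc in documents]
```

The relational insert is rejected until the schema is altered, while the second document simply carries different fields than the first.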

Differences

What to choose?

The CAP (Consistency, Availability, Partition tolerance) theorem states that a distributed system can guarantee at most two of the following three properties: consistency, availability, and partition tolerance.

- CP: cannot deliver availability. The system has to shut down the non-consistent node until the partition is resolved.

- CA: cannot deliver partition tolerance (fault tolerance).

- AP: cannot deliver consistency.
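The trade-off can be sketched with a toy two-replica store. This is only an illustration under simplifying assumptions: the class names and the "CP"/"AP" mode flag are invented here, and real systems handle partitions with far more nuance.

```python
class Replica:
    def __init__(self):
        self.value = None


class TwoNodeStore:
    """Toy store with two replicas and a switchable network partition."""

    def __init__(self, mode):
        self.mode = mode  # "CP" or "AP" (illustrative labels)
        self.a, self.b = Replica(), Replica()
        self.partitioned = False

    def write(self, value):
        """Write through replica a; replica b is reachable only without a partition."""
        if not self.partitioned:
            self.a.value = self.b.value = value
            return "ok"
        if self.mode == "CP":
            return "unavailable"  # refuse the write rather than let replicas diverge
        self.a.value = value  # AP: accept the write, replicas now disagree
        return "ok"

    def consistent(self):
        return self.a.value == self.b.value


cp, ap = TwoNodeStore("CP"), TwoNodeStore("AP")
for store in (cp, ap):
    store.write("v1")
    store.partitioned = True
cp_result = cp.write("v2")
ap_result = ap.write("v2")
```

During the partition the CP store gives up availability and stays consistent, while the AP store stays available and lets its replicas diverge.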

Advantages of NoSQL

- Flexible schemas

- Horizontal Scaling

- High Availability

- Fast Queries

- Open Source

Disadvantages of NoSQL

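- No standard query language or rules across products

- Limited query capability

- Many systems do not guarantee consistency when multiple transactions run simultaneously

- Maintaining unique values as keys becomes difficult

- Many systems do not support full ACID transactions

- Stored data can be larger than in an equivalent SQL database, since denormalized records repeat field names and values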

Types of NoSQL Databases

- Document: MongoDB, CouchDB. The value is a document in JSON, XML, or text form.

- Key-value: Redis, Aerospike, Memcached. The value is an opaque BLOB.

- Column: HBase, Cassandra, Accumulo.

- Graph: Neo4j. Data is stored as nodes and edges.

https://db-engines.com/en/ranking

MongoDB

MongoDB is an open-source, document-oriented database that stores data in the form of documents (key-value pairs). Its main features:

- Ad hoc query support: MongoDB indexes BSON documents and uses the MongoDB Query Language (MQL)

- Indexing

- Sharding: splitting a large dataset across multiple distributed machines

- Load balancing through replication and sharding

- Replicated file storage over multiple servers

- Data aggregation

- High performance

- Large Media Storage

MongoDB stores documents in a format called BSON (Binary JSON), which has a similar structure to JSON but is intended for storing a large number of documents.

The amount of data returned by MongoDB can be limited using a projection.
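As a rough illustration of what an inclusion projection does, the sketch below imitates it on a plain dictionary. The helper `project` is hypothetical and not part of any MongoDB driver; it only mirrors the rule that listed fields are kept and `_id` is kept unless explicitly excluded.

```python
def project(document, projection):
    """Keep only the fields marked 1 in the projection; _id is kept unless set to 0."""
    wanted = {field for field, flag in projection.items() if flag == 1}
    if projection.get("_id", 1) != 0:
        wanted.add("_id")
    return {field: value for field, value in document.items() if field in wanted}


# Sample document with made-up values.
user = {"_id": 7, "name": "Ann", "age": 31, "address": "1 Main St", "likes": ["hiking"]}

slim = project(user, {"name": 1, "age": 1, "_id": 0})
with_id = project(user, {"name": 1})
```

Only the requested fields travel back to the client, which matters when documents are large.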

Indexes

Indexes support the efficient execution of queries in MongoDB. Without indexes, MongoDB must perform a collection scan, that is, scan every document in a collection to select those documents that match the query statement. If an appropriate index exists for a query, MongoDB can use the index to limit the number of documents it must inspect.
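The difference between a collection scan and an index lookup can be sketched in plain Python. This is a simplified model: a sorted list with binary search stands in for the index structure, and the collection and field names are invented for the example.

```python
import bisect

# A made-up collection of 1000 documents.
docs = [{"_id": i, "name": f"user{i:03d}"} for i in range(1000)]


def collection_scan(name):
    """Inspect every document and count how many were examined."""
    examined = 0
    matches = []
    for doc in docs:
        examined += 1
        if doc["name"] == name:
            matches.append(doc)
    return matches, examined


# A single-field index sketched as a sorted list of (key, position) pairs.
index = sorted((doc["name"], pos) for pos, doc in enumerate(docs))
keys = [key for key, _ in index]


def index_lookup(name):
    """Binary-search the index, then fetch only the matching documents."""
    lo = bisect.bisect_left(keys, name)
    hi = bisect.bisect_right(keys, name)
    matches = [docs[pos] for _, pos in index[lo:hi]]
    return matches, len(matches)


scan_matches, scan_examined = collection_scan("user742")
idx_matches, idx_examined = index_lookup("user742")
```

Both paths return the same document, but the scan examines all 1000 documents while the index path fetches only the one that matches.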

db.collection.createIndex( { name: -1 } )

Types:

- Single Field

- Compound

- Multikey

- Text

- Hashed

- Sparse

- Partial

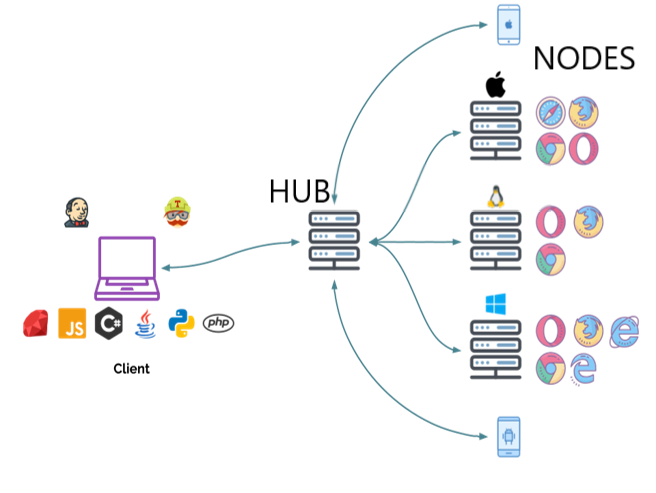

MongoDB Architecture

MongoDB Storage

MongoDB:GridFS

GridFS is a versatile storage system that is suited to handling large files, such as those exceeding the 16 MB document size limit.
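The core idea behind GridFS is to split a large file into small chunks that each fit comfortably in a document. Below is a minimal sketch of that idea, not the driver's implementation: the chunk size is assumed to mirror the GridFS default of 255 KiB, and the helper names and file id are hypothetical.

```python
CHUNK_SIZE = 255 * 1024  # assumed to mirror the default GridFS chunk size


def split_into_chunks(file_id, data, chunk_size=CHUNK_SIZE):
    """Return one small record per chunk, numbered in order."""
    return [
        {"files_id": file_id, "n": n, "data": data[start:start + chunk_size]}
        for n, start in enumerate(range(0, len(data), chunk_size))
    ]


def reassemble(chunks):
    """Rebuild the original bytes by concatenating chunks in order of n."""
    return b"".join(c["data"] for c in sorted(chunks, key=lambda c: c["n"]))


payload = bytes(20 * 1024 * 1024)  # a 20 MiB file, above the 16 MB document limit
chunks = split_into_chunks("video-1", payload)
```

Each chunk stays far below the document size limit, and reading the file back is just a matter of fetching the chunks in order.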

MongoDB:Sharding

Sharding is a method for distributing data across multiple machines. MongoDB uses sharding to support deployments with very large data sets and high throughput operations.
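Here is a small sketch of how a hashed shard key can spread documents across machines. It is a simplification: the shard names are hypothetical, and a plain hash-modulo rule is used, whereas MongoDB assigns ranges of shard key values (chunks) to shards.

```python
import hashlib
from collections import Counter

SHARDS = ["shard0", "shard1", "shard2"]  # hypothetical shard names


def shard_for(shard_key_value):
    """Map a shard key value to a shard using a stable hash."""
    digest = hashlib.md5(str(shard_key_value).encode("utf-8")).digest()
    return SHARDS[int.from_bytes(digest[:8], "big") % len(SHARDS)]


# Route 3000 made-up user ids and count how many land on each shard.
placement = Counter(shard_for(user_id) for user_id in range(3000))
```

The same key always routes to the same shard, and the hash spreads keys roughly evenly, which is what lets throughput grow as shards are added.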

MongoDB:Replication

A replica set in MongoDB is a group of mongod processes that maintain the same data set. Replica sets provide redundancy and high availability, and are the basis for all production deployments.

HBase

Apache HBase is a non-relational, distributed data store that is built on top of HDFS and is part of the Hadoop ecosystem.

HBase was released as an open-source implementation of Google’s Bigtable.

HBase has the following features:

- Distributed storage: Apache HBase is a distributed, column-oriented database that is built on top of the HDFS. It allows data to be stored and processed in a distributed manner.

- Flexible schema: HBase does not follow any strict schema, i.e., you can add any number of columns dynamically to an HBase table. HBase columns do not have any specific data type, and all the data in HBase is stored in the form of bytes.

- Sorted: HBase records are sorted by RowKey. Every HBase RowKey must be unique, i.e., no two rows can have the same RowKey.

- Data replication: It supports the replication of data across a cluster.

- Faster lookups: HBase stores data in indexed HDFS files and uses HashMap internally. It also allows random access to the data. This enables faster lookup.

- Horizontal scalability: HBase scales out rather than up. If the cluster requires more resources, more servers can be added, up to thousands of commodity servers.

- Linear scalability.

- Automatic failover support.

- Consistent reads and writes.

- Integration with Hadoop, both as a source and as a destination.

- An easy-to-use Java API for clients.

- Data replication across clusters.

Note: HBase is not optimised for joins since there are no relations in HBase.

HBase Data Model
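The data model can be pictured as a sorted map: a row key leads to columns named family:qualifier, and each column keeps a limited number of timestamped versions stored as bytes. The toy class below is a sketch under those assumptions; the class name, timestamps, and the second sample row are invented for illustration.

```python
from collections import defaultdict


class ToyTable:
    """Rows keyed by row key; each column keeps a few timestamped versions."""

    def __init__(self, max_versions):
        self.max_versions = max_versions  # per column family, e.g. {"personal_data": 2}
        self.rows = defaultdict(dict)  # row key -> {"family:qualifier": [(ts, value), ...]}

    def put(self, row_key, column, value, ts):
        family = column.split(":", 1)[0]
        if family not in self.max_versions:
            raise KeyError(f"unknown column family: {family}")
        versions = self.rows[row_key].setdefault(column, [])
        versions.append((ts, value))
        versions.sort(reverse=True)  # newest first
        del versions[self.max_versions[family]:]  # drop versions beyond the limit

    def get(self, row_key, column):
        """Return the newest value for a cell."""
        return self.rows[row_key][column][0][1]

    def scan(self):
        """Yield rows in sorted row-key order."""
        for row_key in sorted(self.rows):
            yield row_key, self.rows[row_key]


table = ToyTable({"personal_data": 2, "professional_data": 4})
table.put("2", "personal_data:surname", b"example", ts=1)
table.put("1", "personal_data:surname", b"khimin", ts=1)
table.put("1", "personal_data:age", b"20", ts=1)
table.put("1", "personal_data:age", b"21", ts=2)
table.put("1", "personal_data:age", b"22", ts=3)
```

Columns can be added per row without changing a schema; only the column families are fixed when the table is created.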

HBase Architecture

HBase is essentially a column-oriented key-value data store. It is a natural fit as a top layer on HDFS because it works well with the kind of data that Hadoop processes.

Both read and write operations are fast, and they stay fast even on very large datasets.

Components:

- HBase Regions: a region contains all the rows between the start key and the end key assigned to it, in sorted order. A region has a configurable maximum size (256 MB by default in early releases; current releases use a larger default).

- Region Server: serves a group of regions.

- HMaster: manages the collection of Region Servers, which reside on DataNodes.

- ZooKeeper: acts as the coordinator inside HBase. Every Region Server sends a continuous heartbeat to ZooKeeper, which tracks which servers are alive and available.

- META table: a special HBase catalog table that maintains a list of all the regions in the HBase storage system and the Region Servers hosting them.
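Because each region covers a sorted range of row keys, finding the region for a row is a lookup over the region start keys. The sketch below imitates that lookup with a binary search; the start keys and server names are hypothetical, and a real client reads this information from the META table.

```python
import bisect

# Hypothetical META-like catalog: sorted region start keys and their servers.
REGION_START_KEYS = ["", "g", "n", "t"]
REGION_SERVERS = ["rs1", "rs2", "rs1", "rs3"]


def locate_region(row_key):
    """Return (region index, server) for the region whose key range holds row_key."""
    idx = bisect.bisect_right(REGION_START_KEYS, row_key) - 1
    return idx, REGION_SERVERS[idx]
```

For example, `locate_region("monkey")` falls in the region that starts at "g" and ends before "n", so the request goes to the server hosting that region.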

HBase Use cases

- Internet of Things

- Product catalogs and retail apps

- Messaging Apps

- Social Media Analytics Engines

Most common commands

create 'employees', {NAME => 'personal_data', VERSIONS => 2}, {NAME => 'professional_data', VERSIONS => 4}

put 'employees','1','personal_data:surname','khimin'

put 'employees','1','personal_data:age',21

scan 'employees'

count 'employees', {FILTER => "SingleColumnValueFilter('personal_data', 'age', <, 'binary:40')"}

disable 'employees'

drop 'employees'

Cassandra — Lady of NoSQL

Cassandra is a highly scalable, high-performance distributed database designed to handle large amounts of data across many commodity servers, providing high availability with no single point of failure.

Strengths:

- Good for heavy write workloads, writes are cheap

- Data distribution across multiple data centers and cloud availability zones.

- Replication

- In combination with Spark, Cassandra can be a strong “backbone” for real-time analytics, and it scales linearly. If you anticipate growth in real-time workloads, Cassandra has a clear advantage here.

Limitations:

- No subquery support for aggregation. This is by design.

- All data for a single partition must fit on disk in a single node in the cluster.

- It is recommended to keep the number of rows within a partition under 100k and the partition size on disk under 100 MB.

- A single column value is limited to 2 GB (1 MB is recommended).

Features:

- Open-source

- Familiar interface: CQL (Cassandra Query Language) is similar to SQL.

- High performance: Cassandra is masterless, so every node can serve both read and write operations, unlike primary/secondary designs in which only the primary accepts writes.

- Active everywhere / zero downtime: data is replicated across cloud environments.

- Scalability: scales horizontally by simply adding more nodes to the cluster.

- Seamless Replication

Use cases:

- IoT

- User activity tracking and monitoring

- Messaging apps — fast data writing and reading.

- Fraud detection and spam monitoring

Data Structure model

In Cassandra, data is stored as a set of rows that are organized into tables. Tables are also called column families. Each row is identified by a primary key value. Data is partitioned by the partition key, which is the first part of the primary key.
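Partitioning can be sketched as a token ring: the partition key is hashed to a token, and the row goes to the first node clockwise from that token plus the following replicas. The code below is a toy model under stated assumptions: MD5 stands in for the real partitioner, each node owns a single token derived from its name, and the node names are hypothetical.

```python
import bisect
import hashlib


def token(partition_key):
    """Hash a partition key onto the ring (MD5 here, a stand-in for the real partitioner)."""
    return int.from_bytes(hashlib.md5(partition_key.encode("utf-8")).digest()[:8], "big")


class Ring:
    def __init__(self, nodes, replication_factor):
        self.rf = replication_factor
        # Each node owns the ring position obtained by hashing its name.
        self.entries = sorted((token(name), name) for name in nodes)
        self.tokens = [t for t, _ in self.entries]

    def replicas(self, partition_key):
        """First node clockwise from the key's token, plus the next rf - 1 nodes."""
        start = bisect.bisect_left(self.tokens, token(partition_key)) % len(self.entries)
        return [self.entries[(start + i) % len(self.entries)][1] for i in range(self.rf)]


ring = Ring(["node-a", "node-b", "node-c", "node-d"], replication_factor=3)
owners = ring.replicas("user:42")
```

Every node can compute the same owners for a key without asking a coordinator, which is one reason the design has no single point of failure.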

Components:

- Keyspace: the outermost container, which defines how its data is replicated

- Table

- Columns

Cassandra Ring Topology

Gossip Protocol — It is a peer-to-peer communication protocol in which nodes periodically exchange state information about themselves and other nodes they know about.
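The exchange can be simulated in a few lines. This is only a sketch of the general idea, not Cassandra's actual gossip implementation: node names, the (version, status) tuples, and the fixed random seed are assumptions made for the example.

```python
import random


def merge(mine, theirs):
    """Keep, per node, whichever entry has the higher version number."""
    for node, (version, status) in theirs.items():
        if node not in mine or mine[node][0] < version:
            mine[node] = (version, status)


def gossip_round(views, rng):
    """Every node exchanges state with one randomly chosen peer."""
    names = list(views)
    for name in names:
        peer = rng.choice([n for n in names if n != name])
        merge(views[peer], views[name])  # push what this node knows
        merge(views[name], views[peer])  # pull what the peer knows


rng = random.Random(0)
names = ["n1", "n2", "n3", "n4", "n5"]
# Initially each node only knows its own state.
views = {name: {name: (1, "UP")} for name in names}

rounds = 0
while any(len(view) < len(names) for view in views.values()) and rounds < 100:
    gossip_round(views, rng)
    rounds += 1
```

After a handful of rounds every node holds state for all five nodes, even though no node ever talked to all the others directly.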

Snitching

- Snitches teach Cassandra enough about the network topology to route requests efficiently.

- They allow Cassandra to spread replicas around your cluster to avoid correlated failures.

Cassandra Storage Engine

Memtable: an in-memory structure where Cassandra buffers writes.
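A rough sketch of why buffered writes are cheap: a write only updates an in-memory map, and the map is flushed to an immutable sorted table once it grows past a threshold. The class below is a toy model with invented names. It leaves out the commit log and compaction, and the flush threshold is counted in keys rather than bytes.

```python
class ToyStorageEngine:
    """Buffer writes in a memtable and flush them to immutable sorted tables."""

    def __init__(self, flush_threshold):
        self.flush_threshold = flush_threshold
        self.memtable = {}
        self.sstables = []  # each flush appends one sorted, read-only list

    def write(self, key, value):
        self.memtable[key] = value  # cheap: an in-memory update only
        if len(self.memtable) >= self.flush_threshold:
            self.flush()

    def flush(self):
        self.sstables.append(sorted(self.memtable.items()))
        self.memtable = {}

    def read(self, key):
        """Check the memtable first, then flushed tables from newest to oldest."""
        if key in self.memtable:
            return self.memtable[key]
        for table in reversed(self.sstables):
            for k, v in table:
                if k == key:
                    return v
        return None


engine = ToyStorageEngine(flush_threshold=3)
for key, value in [("a", 1), ("b", 2), ("c", 3)]:
    engine.write(key, value)  # the third write triggers a flush
engine.write("a", 9)  # the newer value lives in the memtable
```

Reads have to consult both the memtable and the flushed tables, which is part of why writes are cheaper than reads in this design.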

Interview questions:

- https://www.guru99.com/cassandra-interview-questions.html

- https://data-flair.training/blogs/cassandra-interview-questions/

- https://tekslate.com/cassandra-interview-questions-answers

References: